This week I'm talking with my open source students about bugs. Above all, I want them to learn how The Bug is the unit of work of open source. Learning to orient your software practice around the idea that we incrementally improve an existing piece of code (or a new one) by filing, discussing, fixing, and landing bugs is an important step. Doing so makes a number of things possible:

- it socializes us to the fact that software is inherently buggy: all code has bugs, whether we are aware of them yet or not. Ideally this leads to an increased level of humility

- it allows us to ship something now that's good enough, and improve it as we go forward. This is in contrast to the idea that we'll wait until things are done or "correct."

- it provides an interface between the users and creators of software, where we can interact outside purely economic relationships (e.g., buying/selling).

- connected with the above, it enables a culture of participation. Understanding how this culture works provides opportunities to become involved.

One of the ways that new people can participate in open source projects is through Triaging existing bugs: testing if a bug (still) exists or not, connecting the right people to it, providing more context, etc.

As I teach these ideas this week, I thought I'd work on triaging a bug in a project I haven't touched before. When you're first starting out in open source, the process can be very intimidating and mysterious. Often I find my students look at what goes on in these projects as something they do vs. something I could do. It almost never feels like you have enough knowledge or skill to jump in and join the current developers, who all seem to know so much.

The reality is much more mundane. The magic you see other developers doing turns out to be indistinguishable from trial and error, copy/pasting, asking questions, and failing more than you succeed. It's easy to confuse the end result of what someone else does with the process you'd need to undergo if you wanted to do the same.

Let me prove it too you: let's go triage a bug.

TensorFlow and TensorBoard

One of the projects that's trending right now on GitHub is Google's open source AI and Machine Learning framework, TensorFlow. I've been using TensorFlow in a personal project this year to do real-time image classification from video feeds, and it's been amazing to work with and learn. There's a great overview video of the kinds of things Google and others are doing with TensorFlow to automate all kinds of things on the tensorflow.org web site, along with API docs, tutorials, etc.

TensorFlow is just under 1 million lines of C++ and Python, and has over 1,100 contributors. I've found the quality of the docs and tools to be first class, especially for someone new to AI/ML like myself.

One of those high quality tools is TensorBoard.

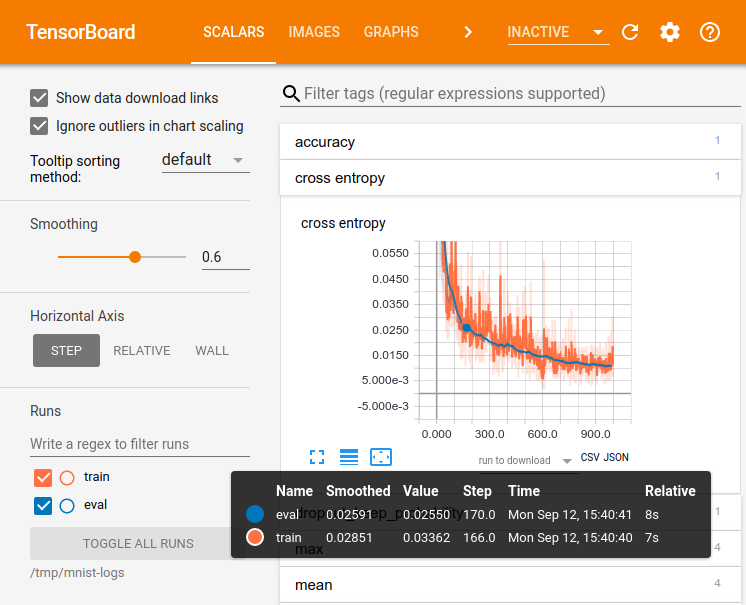

TensorBoard is a Python-based web app that reads log data generated by TensorFlow as it trains a network. With TensorBoard you can visualize your network, understand what's happening with learning and error rates, and gain lots of insight into what's actually going on with your training runs. There's an excellent video from this year's TensorFlow Dev Summit (more videos at that link) showing a lot of the cool things that are possible.

A Bug in TensorBoard

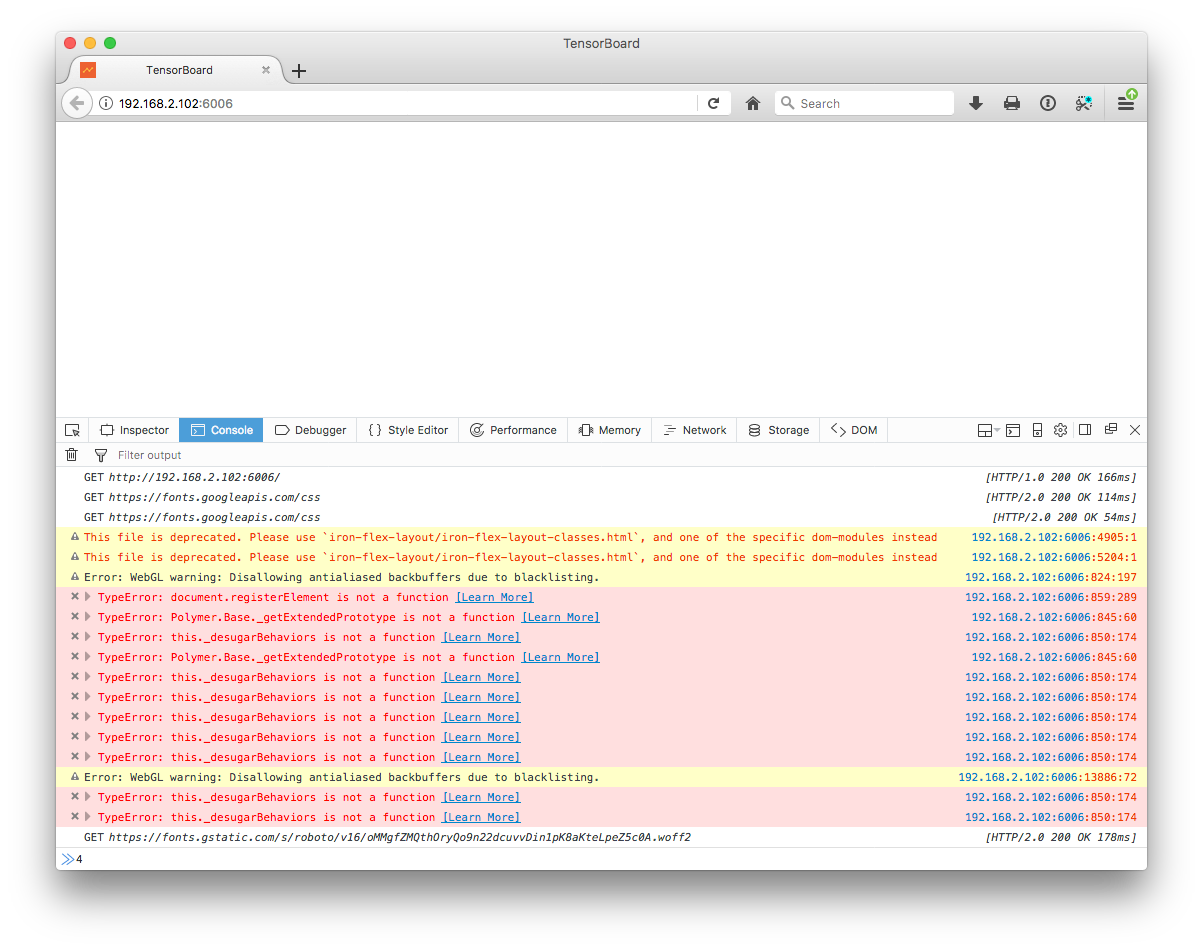

When I started using TensorFlow and TensorBoard this spring, I immediately hit a bug. My default browser is Firefox, and here's what I saw when I tried to view TensorBoard locally:

Notice all the errors in the console related to Polymer and document.registerElement not being a function. It looks like an issue with missing support for Custom Elements. In Chrome, everything worked fine, so I used that while I was iterating on my neural network training.

Now, since I have some time, I thought I'd go back and see if this was fixable. The value of having the TensorBoard UI be web based is that you should be able to use it in all sorts of contexts, and in all sorts of browsers.

Finding/Filing the Bug

My first step was to see if this bug was known. If someone has already filed it, then I won't need to; it may even be that someone is already fixing it, or that it's fixed in an updated version.

I begin by looking at the TensorBoard repo's list of Issues. As I said above, one of the amazing things about true open source projects is that more than just the code is open: so too is the process by which the code evolves in the form of bugs being filed/fixed. Sometimes we can obtain a copy of the source for a piece of software, but we can't participate in its development and maintenance. It's great that Google has put both the code and entire project on GitHub.

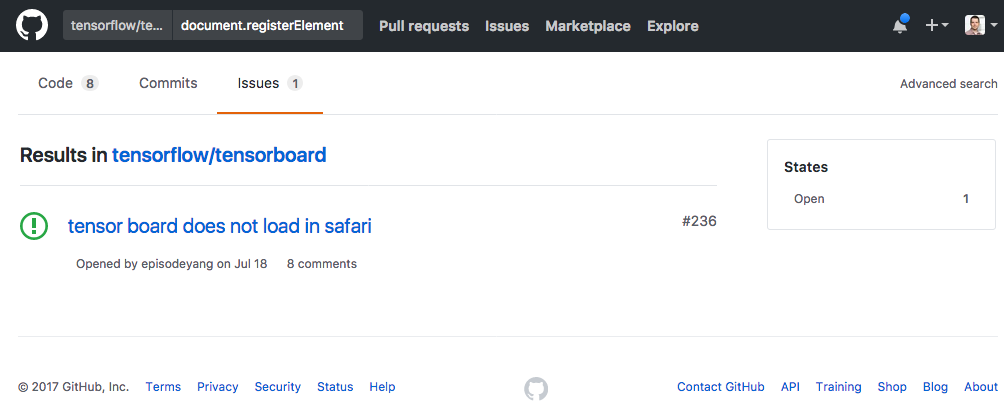

At the time of writing, there are only 120 open issues, so one strategy would be to just look through them all for my issue. This often won't be possible, though, and a better approach is to search the repo for some unique string. In this case, I have a bunch of error messages that I can use for my search.

I search for document.registerElement and find 1 issue, which is a lovely outcome:

Issue #236: tensor board does not load in safari is basically what I'm looking for, and discusses the same sorts of errors I saw in Firefox, but in the context of Safari.

Lesson: often a bug similar to your own is already filed, but may be hiding behind details different from the one you want to file. In this case, you might unknowingly file a duplicate (dupe), or add your information to an existing bug. Don't be afraid to file the bug: it's better to have it filed in duplicate than for it to go unreported.

Forking and Cloning the repo

Now that I've found Issue #236, I have a few options. First, I might decide that having this bug filed is enough: someone on the team can fix it when they have time. Another possibility is that I might have found that someone was already working on a fix, and a Pull Request was open for this Issue, with code to address the problem. A third option is for you to fix the bug yourself, and this is the route I want to go now.

My first step is to Fork the TensorBoard repo into my own GitHub account. I need a version of the code that I can modify vs. just read.

Once that completes, I'll have an exact copy of the TensorBoard repo that I control, and which I can modify. This copy lives on GitHub. To work with it on my laptop, I'll need to Clone it to my local computer as well, so that I can make and test changes

Setting up TensorBoard locally

I have no idea how to run TensorBoard from source vs. as part of my TensorFlow installation. I begin by reading their README.md file. In it I notice a useful discussion within the Usage section, which talks about how to proceed. First I'll need to install Bazel.

Lesson: in almost every case where you'll work on a bug in a new project, you'll be asked to install and setup a development environment different from what you already have/know. Take your time with this, and don't give up too easily if things don't go as smoothly as you expect: many fewer people test this setup than do the resulting project it is meant to create.

Bazel is a build/test automation tool built and maintained by Google. It's available for many platforms, and there are good instructions for installing it on your particular OS. I'm on macOS, so I opt for the Homebrew installation. This requires Java, which I also install.

Now I'm able to try and do the build I follow the instructions in the README, and within a few seconds get an error:

$ cd tensorboard

$ bazel build tensorboard:tensorboard

Extracting Bazel installation...

.............

ERROR: /private/var/tmp/_bazel_humphd/d51239168182c03bedef29cd50a9c703/external/local_config_cc/BUILD:49:5: in apple_cc_toolchain rule @local_config_cc//:cc-compiler-darwin_x86_64: Xcode version must be specified to use an Apple CROSSTOOL.

ERROR: Analysis of target '//tensorboard:tensorboard' failed; build aborted.

INFO: Elapsed time: 8.965s

This error is a typical example of the kind of problem one encounters working on a new project. Specifically, it's OS specific, and relates to a first-time setup issue--I don't have XCode setup properly.

I spend a few minutes searching for a solution. I look to see if anyone has filed an issue with TensorBoard on GitHub specifically about this build error--maybe someone has had this problem before, and it got solved? I also Google to see if anyone has blogged about it or asked on StackOverflow: you are almost never the only person who has hit a problem.

I find some help on StackOverflow, which suggests that I don't have XCode properly configured (I know it's installed). It suggests some commands I can try to fully configure things, none of which solve my issue.

It looks like it wants the full version of XCode vs. just the commandline tools. The full XCode is massive to download, and I don't really want to wait, so I do a bit more digging to see if there is any other workaround. This may turn out to be a mistake, and it might be better to just do the obvious thing instead of trying to find a workaround. However, I'm willing to spend an additional 20 minutes of research to save hours of downloading.

Some more searching reveals an interesting issue on the Bazel GitHub repo. Reading through the comments on this issues, it's clear that lots of other people have hit this--it's not just me. Eventually I read this comment, with 6 thumbs-up reactions (i.e., some agreement that it works):

just for future people.

sudo xcode-select -s /Applications/Xcode.app/Contents/Developercould do the the trick if you install Xcode and bazel still failing.

This allows Bazel to find my compiler and the build to proceed further...before stopping again with a new error: clang: error: unknown argument: '-fno-canonical-system-headers'.

This still sounds like a setup issue on my side vs. something in the TensorBoard code, so I keep reading. This discussion on the Bazel Google Group seems useful: it sounds like I need to clean my build and regenerate things, now that my XCode toolchain is properly setup. I do that, and my build completes without issue.

Lesson: getting this code to build locally required me to consult GitHub, StackOverflow, and Google Groups. In other words, I needed the community to guide me via asking and answering questions online. Don't be afraid to ask questions in public spaces, since doing so leaves traces for those who will follow in your footsteps.

Running TensorBoard

Now that I've built the source, I'm ready to try running it. TensorBoard is meant to be used in conjunction with Tensorflow. In this case, however, I'm interested in using it on its own, purely for the purpose of reproducing my bug, and testing a fix. I don't actually

care about having Tensorflow and real training data to visualize. I notice that the DEVELOPMENT.md file seems to indicate that it's possible to fake some training data and use that in the absence of a real TensorFlow project. I try what it suggests, which fails:

...

line 40, in create_summary_metadata

metadata = tf.SummaryMetadata(

AttributeError: 'module' object has no attribute 'SummaryMetadata'

ERROR: Non-zero return code '1' from command: Process exited with status 1.

From having programmed with TensorFlow before, I assume here that tf (i.e. the TensorFlow Python module) is missing an expected attribute, namely, SummaryMetadata. I've never heard of it, but Google helps me locate the necessary API docs.

This leads me to conclude that my installed version of TensorFlow (I installed it 4 months earlier) might not have this new API, and the code in TensorBoard now expects it to exist. The API docs I'm consulting are for version 1.3 of the TensorFlow API. What do I have installed?

$ pip search tensorflow

...

INSTALLED: 1.2.1

LATEST: 1.3.0

Maybe upgrading from 1.2.1 to 1.3.0 will solve this? I update my laptop to TensorFlow 1.3.0 and am now able to generate the fake data for TensorBoard.

Lesson: running portions of a larger project in isolation often means dealing with version issues and manually installing dependencies. Also, sometimes dependencies are assumed, as was TensorFlow 1.3 in this case. Likely the TensorBoard developers all have TensorFlow installed and/or are developing it at the same time. In cases like this a README may not mention all the implied dependencies.

Using this newly faked data, I try running my version of TensorBoard...which again fails with a new error:

...

from tensorflow.python.debug.lib import grpc_debug_server

ImportError: cannot import name grpc_debug_server

ERROR: Non-zero return code '1' from command: Process exited with status 1.

After some more searching, I find a 10-day old open bug in TensorBoard itself. This particular bug seems to be another version skew issue between dependencies, TensorFlow, and TensorBoard. The module in question, grpc_debug_server, seems to come from TensorFlow. Looking at the history of this file, the code is pretty new, making me wonder if it is once again that I'm running something with an older API. A comment in this issue gives a clue as to a possible fix:

FYI, I ran into the same problem, and I did

pip install grpcwhich seemed to fix the problem.

I give this a try, but TensorBoard still won't run. Further on in this issue I read another comment indicating I need the "nightly version of TensorFlow." I've never worked with the nightly version of TensorFlow before (didn't know such a thing existed), and I have no idea how to install that (the comment assumes one knows how to do this).

A bit more searching reveals the answer, and I install the nightly version:

$ pip install tf-nightly

Once again I try running my TensorBoard, and this time, it finally works.

Lesson: start by assuming that an error you're seeing has been encountered before, and go looking for an existing issue. If you don't find anything, maybe you are indeed the first person to hit it, in which

case you should file a new issue yourself so you can start a discussion and work toward a fix. Everyone hits these issues. Everyone needs help.

Reproducing the Bug

With all of the setup now behind us, it's time to get started on our actual goal. My first step in tackling this bug is to make sure I can reproduce it, that is, make sure I can get TensorBoard to fail in Safari and Firefox. I also want to confirm that things work in Chrome, which would give me some assurance that I've got a working source build.

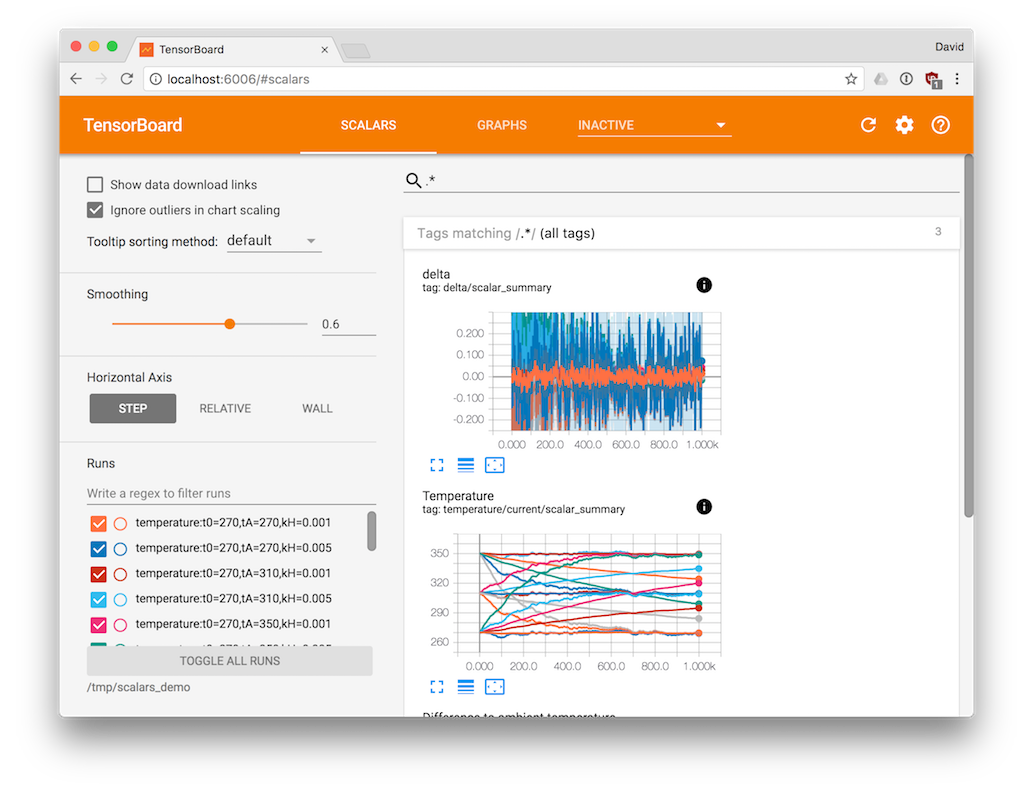

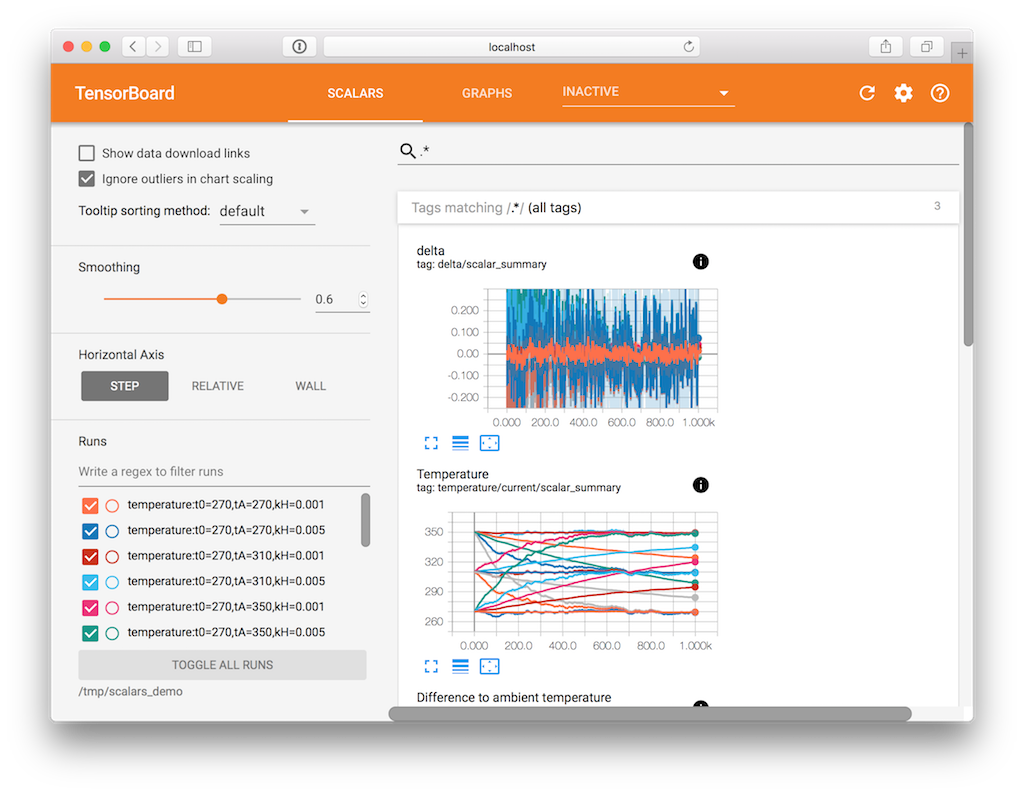

Here's my local TensorBoard running in Chrome:

Next I try Safari:

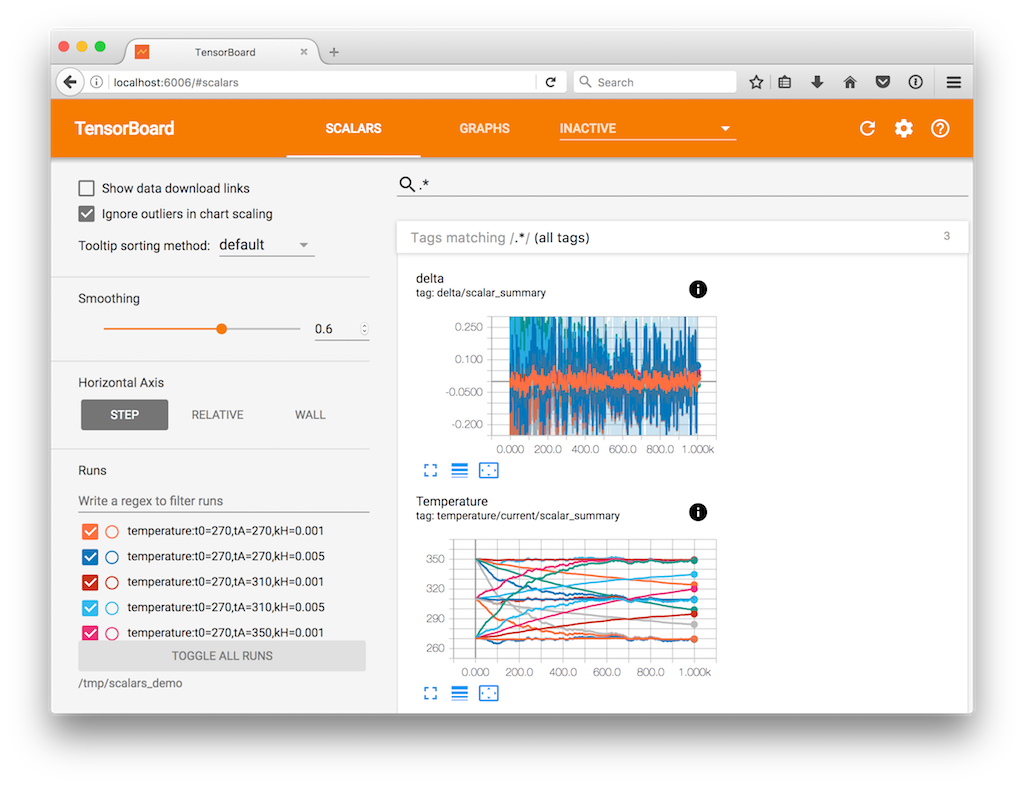

And...it works? I try Firefox too:

And this works too. At this point I have two competing emotions:

- I'm pleased to see that the bug is fixed.

- I'm frustrated that I've done all this work to accomplish nothing--I was hoping I could fix it.

The Value of Triaging Bugs

It's kind of ironic that I'm upset about this bug being fixed: that's the entire point of my work, right? I would have enjoyed getting to try and fix this myself, to learn more about the code, to get involved in the project. Now I feel like I have nothing to contribute.

Here I need to challenge my own feelings (and yours too if you're agreeing with me). Do I really have nothing to offer after all this work? Was it truly wasted effort?

No, this work has value, and I have a great opportunity to contribute something back to a project that I love. I've been able to discover that a previous bug has been unknowingly fixed, and can now be closed. I've done the difficult work of Confirming and Triaging a bug, and helping the project to close it.

I leave a detailed comment with my findings. This then causes the bug to get closed by a project member with the power to do so.

So the result of my half-day of fighting with TensorBoard is that a bug got closed. That's a great outcome, and someone needed to do this work in order for this to happen. My willingness to put some effort into it was key. It's also paved the way for me to do follow-up work, if I choose: my computer now has a working build/dev environment for this project. Maybe I will work on another bug in the future.

There's more to open source than fixing bugs: people need to file them, comment on them, test them, review fixes, manage them through their lifetime, close them, etc. We can get involved in any/all of these steps, and it's important to realize that your ability to get involved is not limited to your knowledge of how the code works.